Abstract

We develop a new method for deriving local laws for a large class of random matrices. It is applicable to many matrix models built from sums and products of deterministic or independent random matrices. In particular, it may be used to obtain local laws for matrix ensembles that are anisotropic in the sense that their resolvents are well approximated by deterministic matrices that are not multiples of the identity. For definiteness, we present the method for sample covariance matrices of the form \(Q := T X X^* T^*\), where T is deterministic and X is random with independent entries. We prove that with high probability the resolvent of Q is close to a deterministic matrix, with an optimal error bound and down to optimal spectral scales. As an application, we prove the edge universality of Q by establishing the Tracy–Widom–Airy statistics of the eigenvalues of Q near the soft edges. This result applies in the single-cut and multi-cut cases. Further applications include the distribution of the eigenvectors and an analysis of the outliers and BBP-type phase transitions in finite-rank deformations; they will appear elsewhere. We also apply our method to Wigner matrices whose entries have arbitrary expectation, i.e. we consider \(W+A\) where W is a Wigner matrix and A a Hermitian deterministic matrix. We prove the anisotropic local law for \(W+A\) and use it to establish edge universality.

Notes

This means the density of the absolutely continuous part of \(\varrho \). In fact, as explained after Lemma 4.10 below, \(\varrho \) is always absolutely continuous on \(\mathbb {R}{\setminus } \{0\}\).

References

Bai, Z., Silverstein, J.W.: No eigenvalues outside the support of the limiting spectral distribution of large-dimensional sample covariance matrices. Ann. Probab. 26, 316–345 (1998)

Bai, Z., Silverstein, J.W.: Exact separation of eigenvalues of large dimensional sample covariance matrices. Ann. Probab. 27, 1536–1555 (1999)

Bai, Z., Silverstein, J.W.: Spectral Analysis of Large Dimensional Random Matrices. Springer, New York (2010)

Bao, Z., Erdős, L., Schnelli, K.: Local law of addition of random matrices on optimal scale. arXiv:1509.07080 (Preprint)

Bao, Z., Pan, G., Zhou, W.: Universality for the largest eigenvalue of a class of sample covariance matrices. arXiv:1304.5690v4 (Preprint)

Benaych-Georges, F.: Local single ring theorem. arXiv:1501.07840 (Preprint)

Bloemendal, A., Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Isotropic local laws for sample covariance and generalized Wigner matrices. Electron. J. Probab. 19, 1–53 (2014)

Bloemendal, A., Knowles, A., Yau, H.-T., Yin, J.: On the principal components of sample covariance matrices. Probab. Theory Relat. Fields 164(1), 459–552 (2016)

Capitaine, M., Péché, S.: Fluctuations at the edges of the spectrum of the full rank deformed GUE. Probab. Theory Relat. Fields 165(1), 117–161 (2016)

Chatterjee, S.: A generalization of the Lindeberg principle. Ann. Probab. 34, 2061–2076 (2006)

El Karoui, N.: Tracy–Widom limit for the largest eigenvalue of a large class of complex sample covariance matrices. Ann. Probab. 35, 663–714 (2007)

Erdős, L., Knowles, A., Yau, H.-T.: Averaging fluctuations in resolvents of random band matrices. Ann. H. Poincaré 14, 1837–1926 (2013)

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Spectral statistics of Erdős–Rényi graphs II: eigenvalue spacing and the extreme eigenvalues. Commun. Math. Phys. 314, 587–640 (2012)

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: The local semicircle law for a general class of random matrices. Electron. J. Probab. 18, 1–58 (2013)

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Spectral statistics of Erdős–Rényi graphs I: local semicircle law. Ann. Probab. 41, 2279–2375 (2013)

Erdős, L., Schlein, B., Yau, H.-T.: Local semicircle law and complete delocalization for Wigner random matrices. Commun. Math. Phys. 287, 641–655 (2009)

Erdős, L., Schlein, B., Yau, H.-T.: Universality of random matrices and local relaxation flow. Invent. Math. 185(1), 75–119 (2011)

Erdős, L., Schlein, B., Yau, H.-T., Yin, J.: The local relaxation flow approach to universality of the local statistics of random matrices. Ann. Inst. H. Poincaré (B) 48, 1–46 (2012)

Erdős, L., Yau, H.-T., Yin, J.: Bulk universality for generalized Wigner matrices. Probab. Theory Relat. Fields 154, 341–407 (2012)

Erdős, L., Yau, H.-T., Yin, J.: Rigidity of eigenvalues of generalized Wigner matrices. Adv. Math. 229, 1435–1515 (2012)

Hachem, W., Hardy, A., Najim, J.: Large complex correlated Wishart matrices: fluctuations and asymptotic independence at the edges. arXiv:1409.7548 (Preprint)

Hachem, W., Loubaton, P., Najim, J., Vallet, P.: On bilinear forms based on the resolvent of large random matrices. Ann. Inst. H. Poincaré (B) 49, 36–63 (2013)

Johansson, K.: From Gumbel to Tracy–Widom. Probab. Theory Relat. Fields 138, 75–112 (2007)

Kargin, V.: Subordination for the sum of two random matrices. Ann. Probab. 43, 2119–2150 (2015)

Knowles, A., Yin, J.: The isotropic semicircle law and deformation of Wigner matrices. Commun. Pure Appl. Math. 66, 1663–1749 (2013)

Lee, J.O., Schnelli, K.: Edge universality for deformed Wigner matrices. Rev. Math. Phys. 27, 1550018 (2015)

Lee, J.O., Schnelli, K.: Tracy–Widom distribution for the largest eigenvalues of real sample covariance matrices with general population. arXiv:1409.4979 (Preprint)

Lytova, A., Pastur, L.: Central limit theorem for linear eigenvalue statistics of random matrices with independent entries. Ann. Probab. 37, 1778–1840 (2009)

Marchenko, V.A., Pastur, L.A.: Distribution of eigenvalues for some sets of random matrices. Math. Sb. 72, 457–483 (1967)

Onatski, A.: The Tracy–Widom limit for the largest eigenvalues of singular complex Wishart matrices. Ann. Appl. Probab. 18, 470–490 (2008)

Pastur, L.A.: On the spectrum of random matrices. Theor. Math. Phys. 10, 67–74 (1972)

Pillai, N.S., Yin, J.: Universality of covariance matrices. Ann. Appl. Probab. 24(3), 935–1001 (2014)

Shcherbina, T.: On universality of bulk local regime of the deformed Gaussian unitary ensemble. Math. Phys. Anal. Geom. 5, 396–433 (2009)

Silverstein, J.W., Bai, Z.: On the empirical distribution of eigenvalues of a class of large dimensional random matrices. J. Multivar. Anal. 54, 175–192 (1995)

Silverstein, J.W., Choi, S.-I.: Analysis of the limiting spectral distribution of large dimensional random matrices. J. Multivar. Anal. 54, 295–309 (1995)

Tao, T., Vu, V.: Random matrices: universality of local eigenvalue statistics. Acta Math. 206, 1–78 (2011)

Tracy, C., Widom, H.: Level-spacing distributions and the Airy kernel. Commun. Math. Phys. 159, 151–174 (1994)

Tracy, C., Widom, H.: On orthogonal and symplectic matrix ensembles. Commun. Math. Phys. 177, 727–754 (1996)

Wigner, E.P.: Characteristic vectors of bordered matrices with infinite dimensions. Ann. Math. 62, 548–564 (1955)

Acknowledgments

A.K. was partially supported by Swiss National Science Foundation grant 144662 and the SwissMAP NCCR grant. J.Y. was partially supported by NSF Grant DMS-1207961. We are very grateful to the Institute for Advanced Study, Thomas Spencer, and Horng-Tzer Yau for their kind hospitality during the academic year 2013-2014. We also thank the Institute for Mathematical Research (FIM) at ETH Zürich for its generous support of J.Y.’s visit in the summer of 2014. We are indebted to Jamal Najim for stimulating discussions.

Appendix A: Properties of \(\varrho \) and Stability of (2.11)

This appendix is devoted to the proofs of the basic properties of \(\varrho \) and the stability of (2.11) in the sense of Definition 5.4. In this appendix we abbreviate  .

A.1. Properties of \(\varrho \) and proof of Lemmas 2.4–2.6

In this subsection we establish the basic properties of \(\varrho \) and prove Lemmas 2.4–2.6.

Proof of Lemma 2.4

We have

From (A.1) we find that \((x^3 f''(x))' > 0\) on \(I_0 \cup \cdots \cup I_n\). Therefore for \(i = 2, \ldots , n\) there is at most one point \(x \in I_i\) such that \(f''(x) = 0\). We conclude that \(I_i\) has at most two critical points of f. Using the boundary conditions of f on \(\partial I_i\), we conclude the proof of \(|{\mathcal {C}} \cap I_i | \in \{0,2\}\) for \(i = 2, \ldots n\).

From (A.1) we also find that \((x^2 f'(x))' < 0\) for \(x \in I_1\). We conclude that there exists at most one point \(x \in I_1\) such that \(f'(x) = 0\). Using the boundary conditions of \(f'\) on \(\partial I_1\), we deduce that \(|{\mathcal {C}} \cap I_1 | = 1\).

Finally, if \(\phi \ne 1\) we have \(f(x) = (\phi - 1) x^{-1} + O(x^{-2})\) as \(x \rightarrow \infty \). From the boundary conditions of f on \(\partial I_0\) we therefore deduce that \(|{\mathcal {C}} \cap I_0 | = 1\). Moreover, if \(\phi = 1\) we find from (A.1) that \(f'(x) \ne 0\) for all \(x \in I_0 {\setminus } \{\infty \}\). This concludes the proof. \(\square \)
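The counting in Lemma 2.4 can be checked numerically. The following Python sketch assumes that f has the form \(f(x) = -x^{-1} + \phi \sum _i \pi _i (x + s_i^{-1})^{-1}\) with \(s_1> \cdots> s_n > 0\) and weights \(\pi _i\) summing to one, and that \(I_1 = (-s_1^{-1}, 0)\), \(I_i = (-s_i^{-1}, -s_{i-1}^{-1})\) for \(i \geqslant 2\), with \(I_0\) the rest of the real line. This is our reading of (2.12) and of the intervals \(I_i\), which are not restated in this appendix. The function names and sample parameters are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def critical_points(s, pi, phi):
    """Real zeros of f'(x), ASSUMING f(x) = -1/x + phi * sum_i pi_i / (x + 1/s_i)."""
    a = 1.0 / np.asarray(s, dtype=float)
    pi = np.asarray(pi, dtype=float)
    # Clearing denominators in f'(x) = 0 gives the polynomial equation
    #   prod_i (x + a_i)^2 - phi * x^2 * sum_i pi_i * prod_{j != i} (x + a_j)^2 = 0.
    full = P.polyfromroots(-a)
    numer = P.polymul(full, full)
    for i in range(len(a)):
        rest = P.polyfromroots(-np.delete(a, i))
        term = phi * pi[i] * P.polymulx(P.polymulx(P.polymul(rest, rest)))
        numer = P.polysub(numer, term)
    roots = P.polyroots(numer)
    return np.sort(roots[np.abs(roots.imag) < 1e-9].real)


def count_by_interval(s, pi, phi):
    """Number of critical points in I_0, I_1, ..., I_n (s sorted decreasingly)."""
    a = 1.0 / np.asarray(s, dtype=float)  # a_1 < a_2 < ... < a_n
    crit = critical_points(s, pi, phi)
    counts = {}
    counts[0] = int(np.sum((crit < -a[-1]) | (crit > 0)))
    counts[1] = int(np.sum((crit > -a[0]) & (crit < 0)))
    for i in range(1, len(a)):  # I_{i+1} = (-a_{i+1}, -a_i)
        counts[i + 1] = int(np.sum((crit > -a[i]) & (crit < -a[i - 1])))
    return counts


# Hypothetical example: two well-separated values of s and a small phi.
print(count_by_interval([4.0, 1.0], [0.5, 0.5], 0.1))
```

For these sample parameters \(I_0\) and \(I_1\) each contain one critical point and \(I_2\) contains two, in agreement with the lemma.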

By multiplying both sides of the equation \(z = f(m)\) in (2.11) with the product of all denominators on the right-hand side of (2.12), we find that \(z = f(m)\) may be also written as \(P_z(m) = 0\), where \(P_z\) is a polynomial of degree \(n+1\), whose coefficients are affine linear functions of z. (Here we used that all \(s_i\) are distinct and that \(m + s_i^{-1} \ne 0\).) This polynomial characterization of m is useful for the proofs of Lemmas 2.5 and 2.6.
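To make the polynomial characterization concrete, here is a minimal Python sketch that builds the coefficients of \(P_z\) and extracts the root in \(\mathbb {C}_+\). It assumes the form \(f(x) = -x^{-1} + \phi \sum _i \pi _i (x + s_i^{-1})^{-1}\) for the right-hand side of (2.12), which is not restated here. The helper names `f`, `P_z` and `m_of_z` are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def f(x, s, pi, phi):
    """ASSUMED form of (2.12): f(x) = -1/x + phi * sum_i pi_i / (x + 1/s_i)."""
    a = 1.0 / np.asarray(s, dtype=float)
    return -1.0 / x + phi * np.sum(np.asarray(pi, dtype=float) / (x + a))


def P_z(z, s, pi, phi):
    """Ascending coefficients of P_z, of degree n + 1 in m and affine in z."""
    a = 1.0 / np.asarray(s, dtype=float)
    full = P.polyfromroots(-a)  # prod_i (m + a_i)
    cross = np.zeros(1)         # sum_i pi_i * prod_{j != i} (m + a_j)
    for i in range(len(a)):
        cross = P.polyadd(cross, pi[i] * P.polyfromroots(-np.delete(a, i)))
    # Multiply z = f(m) by m * prod_i (m + a_i) and move everything to one side.
    coeffs = P.polyadd(z * P.polymulx(full), full)
    return P.polysub(coeffs, phi * P.polymulx(cross))


def m_of_z(z, s, pi, phi):
    """Root of P_z in the upper half-plane, for Im z > 0."""
    roots = P.polyroots(P_z(z, s, pi, phi))
    upper = roots[roots.imag > 0]
    return upper[np.argmax(upper.imag)]


# Hypothetical sample parameters.
s, pi, phi = [4.0, 1.0], [0.5, 0.5], 0.1
z = 3.0 + 0.5j
m = m_of_z(z, s, pi, phi)
print(m, abs(f(m, s, pi, phi) - z))
```

Working with the roots of \(P_z\) rather than iterating \(z = f(m)\) makes all \(n+1\) solutions visible at once, which is what the counting arguments in the proofs of Lemmas 2.5 and 2.6 rely on.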

From Lemma 4.10 we find that the measure \(\varrho \) has a bounded density in \([\tau ,\infty )\) for any fixed \(\tau > 0\). Hence, we may extend the definition of m down to the real axis by setting \(m(E) := \lim _{\eta \downarrow 0} m(E + {{\mathrm {i}}} \eta )\).

Proof of Lemma 2.5

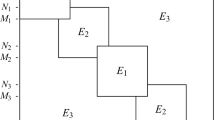

For \(i = 0, \ldots , n\) define the subset \(J_i := \{x \in I_i : f'(x) > 0\}\). The graph of f restricted to \(J_0 \cup \cdots \cup J_n\) is depicted in red in Figs. 2 and 3. From Lemma 2.4 we deduce that if \(i = 2, \ldots , n\) then \(J_i \ne \emptyset \) if and only if \(I_i\) contains two distinct critical points of f, in which case \(J_i\) is an interval. Moreover, we always have \(J_1 \ne \emptyset \ne J_0\).

Next, we observe that for any \(0 \leqslant i < j \leqslant n\) we have \(f(J_i) \cap f(J_j) = \emptyset \). Indeed, if there were \(E \in f(J_i) \cap f(J_j)\) then we would have \(|\{x \in \mathbb {R} : f(x) = E\} | > n + 1\) (see Fig. 2). Since \(f(x) = E\) is equivalent to \(P_E(x) = 0\) and \(P_E\) has degree \(n+1\), we arrive at the desired contradiction. We conclude that the sets \(f(J_i)\), \(0 \leqslant i \leqslant n\), may be strictly ordered.

The claim \(a_1 \geqslant a_2 \geqslant \cdots \geqslant a_{2p}\) may now be reformulated as

In order to show (A.2), we use a continuity argument in \(\phi \). Let \(t \in (0,1]\) and denote by \(f^t\) the right-hand side of (2.12) with \(\phi \) replaced by \(t \phi \). We use the superscript t to denote quantities defined using \(f^t\) instead of \(f = f^1\). Using directly the definition of \(f^t\), it is easy to check that (A.2) holds for small enough \(t > 0\).

We focus on the first inequality of (A.2); the proof of the second one is similar. We have to show that \(f(J_i) > f(J_j)\) whenever \(1 \leqslant i < j \leqslant n\) and \(J_i \ne \emptyset \ne J_j\). First, we claim that for \(1 \leqslant i \leqslant n\) we have

To prove (A.3), we remark that if \(2 \leqslant i \leqslant n\) then \(J_i^t \ne \emptyset \) is equivalent to \(I_i\) containing two distinct critical points. Moreover, \(\partial _t \partial _x f^t(x) < 0\) in \(I_2 \cup \cdots \cup I_n\), from which we deduce that the number of distinct critical points in each \(I_i\), \(i = 2, \ldots , n\), does not decrease as t decreases. Recalling that \(J_1^t \ne \emptyset \), we deduce (A.3).

Next, suppose that there exist \(1 \leqslant i < j \leqslant n\) satisfying \(J_i \ne \emptyset \ne J_j\) and \(f(J_i) < f(J_j)\). From (A.3) we deduce that \(J_i^t \ne \emptyset \ne J_j^t\) for all \(t \in (0,1]\). Moreover, by a simple continuity argument and the fact that for each t we have either \(f^t(J_i^t) < f^t(J_j^t)\) or \(f^t(J_i^t) > f^t(J_j^t)\), we conclude that \(f^t(J_i^t) < f^t(J_j^t)\) for all \(t \in (0,1]\). As explained after (A.2), this is impossible for small enough \(t > 0\). This concludes the proof of (A.2), and hence also of the first sentence of Lemma 2.5.

Next, we prove the second sentence of Lemma 2.5. Suppose that \(\phi \ne 1\) and that \(E \ne 0\) lies in the (open) set \(\bigcup _{i = 0}^n f(J_i) = f \bigl ({\bigcup _{i = 0}^n J_i}\bigr )\). Then the set \(\{x \in \mathbb {R} : f(x) = E\}\) has \(n+1\) points. Recall that m(E) is a solution of \(P_{E}(m(E)) = 0\). Since \(n+1 = \deg P_E\), we deduce that m(E) is real. Since m is the Stieltjes transform of \(\varrho \), we conclude that \(E \notin \mathrm{supp}\, \varrho \) and that \(m'(E) > 0\). This means that \(m \vert _{f(J_i)}\) is increasing, and is therefore the inverse function of \(f \vert _{J_i}\). We conclude that f is a bijection from \(\bigcup _{i = 0}^n J_i\) onto \(\bigcup _{i = 0}^n f(J_i)\) with inverse function m (here we use the convention that for \(\phi \leqslant 1\) we have \(m(0) = \infty \) and \(f(\infty ) = 0\)). By definition, we have \(a_k = f(x_k)\) and the inverse relation \(x_k = m(a_k)\) follows by a simple limiting argument from \(\mathbb {C}_+\) to \(\mathbb {R}\). The case \(\phi = 1\) is handled similarly; we omit the minor modifications.

Finally, in order to prove the third sentence of Lemma 2.5 we have to show that \(a_{2p} \geqslant 0\) and \(a_1 \leqslant C\). It is easy to see that if \(a_{2p} < 0\) then there is an \(E < 0\) with  , which is impossible since  and \(\deg P_E = n+1\). Moreover, the estimate \(a_1 \leqslant C\) is easy to deduce from the definition of f and the estimates \(\phi \leqslant \tau ^{-1}\) and \(s_1^{-1} \geqslant \tau \). \(\square \)

Proof of Lemma 2.6

In the proof of Lemma 2.5 we showed that if \(E \in (0,\infty ) \cap \bigcup _{i = 0}^n f(J_i)\) then \(E \notin \mathrm{supp}\, \varrho \). It therefore suffices to show that if \(E \in \bigcup _{k = 1}^p (a_{2k}, a_{2k - 1})\) then \(\mathrm{Im}\, m(E) > 0\). In that case the set of real preimages \(\{x \in \mathbb {R} : f(x) = E\}\) has \(n - 1\) points (see Fig. 2). Since \(P_E\) has degree \(n+1\) and real coefficients, we conclude that \(P_E\) has a root with positive imaginary part. By the uniqueness of the root of \(P_{E + {{\mathrm {i}}} \eta }\) in \(\mathbb {C}_+\) (see (2.11)) and the continuity of the roots of \(P_{E + {{\mathrm {i}}} \eta }\) in \(\eta \), we therefore conclude by taking \(\eta \downarrow 0\) that \(\mathrm{Im}\, m(E) > 0\). \(\square \)
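The root count used in the proofs of Lemmas 2.5 and 2.6 can be observed numerically. The sketch below counts the real solutions of \(f(x) = E\) as real roots of \(P_E\), again assuming \(f(x) = -x^{-1} + \phi \sum _i \pi _i (x + s_i^{-1})^{-1}\) for (2.12). The function name `real_preimages`, the tolerance and the sample parameters are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def real_preimages(E, s, pi, phi, tol=1e-8):
    """Real solutions x of f(x) = E, computed as the real roots of P_E.

    ASSUMES f(x) = -1/x + phi * sum_i pi_i / (x + 1/s_i).
    """
    a = 1.0 / np.asarray(s, dtype=float)
    full = P.polyfromroots(-a)  # prod_i (x + a_i)
    cross = np.zeros(1)         # sum_i pi_i * prod_{j != i} (x + a_j)
    for i in range(len(a)):
        cross = P.polyadd(cross, pi[i] * P.polyfromroots(-np.delete(a, i)))
    coeffs = P.polysub(P.polyadd(E * P.polymulx(full), full),
                       phi * P.polymulx(cross))
    roots = P.polyroots(coeffs)
    return np.sort(roots[np.abs(roots.imag) < tol].real)


# Hypothetical example with n = 2; the spectrum then has two components.
s, pi, phi = [4.0, 1.0], [0.5, 0.5], 0.1
for E in (10.0, 4.0, 2.0, 1.0):
    print(E, len(real_preimages(E, s, pi, phi)))
```

With these parameters, \(E = 10\) and \(E = 2\) lie outside the support and give \(n + 1 = 3\) real preimages, while \(E = 4\) and \(E = 1\) lie inside the two bulk components and give \(n - 1 = 1\). The two missing roots form the complex-conjugate pair whose member in \(\mathbb {C}_+\) is m(E).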

For the following counting argument, in order to avoid extraneous complications we assume that \(\sigma _i > 0\) for all i. Recall the definition of \(N_k\) from (3.16).

Lemma A.1

Suppose that \(\sigma _j > 0\) for all j. Then \(N \varrho (\{0\}) = (N-M)_+\) and

Moreover, for \(k = 1, \ldots , p - 1\) we have

Proof

Suppose first that \(N > M\). Then \(x_{2p} < 0\) and \(a_{2p} > 0\) (see Fig. 2). Let \(1 \leqslant k \leqslant p\) and define \(\gamma \) as the positively oriented rectangular contour joining the points \(a_{2k - 1} \pm {{\mathrm {i}}}\) and \(a_{2k} \pm {{\mathrm {i}}}\). Then we have \(N_k = - \frac{N}{2 \pi {{\mathrm {i}}}} \oint _{\gamma } m(z) \, {{\mathrm {d}}} z\). We now do a change of coordinates by writing \(w = m(z)\). Define \(\tilde{\gamma }\) as the positively oriented rectangular contour joining the points \(x_{2k - 1} \pm {{\mathrm {i}}}\) and \(x_{2k} \pm {{\mathrm {i}}}\). Since the imaginary parts of w and z have the same sign, we conclude using Cauchy’s theorem that

where in the last step we used the definition of f from (2.12). Hence (A.5) follows. Note that we proved (A.5) also for \(k = p\). Now (A.4) follows easily using \(\sigma _i > 0\) for all i. Since \(\mathrm{supp}\, \varrho \subset \{0\} \cup [a_{2p}, a_{2p - 1}] \cup \cdots \cup [a_2,a_1]\) (recall Lemma 2.6), we get \(N = N \varrho (\{0\}) + \sum _{k = 1}^p N_k\). Therefore summing over \(k = 1, \ldots , p\) in (A.5) yields \(N \varrho (\{0\}) = N - M\), as desired. This concludes the proof in the case \(N > M\).

Next, suppose that \(N \leqslant M\). Then we deduce directly from (2.12) that \(\varrho (\{0\}) = 0\) (see Fig. 3). Hence, (A.4) follows. Finally, exactly as above, we get (A.5) for \(k = 1, \ldots , p-1\). This concludes the proof.

A.2. Stability near a regular edge

The rest of this appendix is devoted to the proofs of the assumption (3.20) and the stability of (2.11) in the sense of Definition 5.4. In fact, we shall prove the following stronger notion of stability, which will be needed to establish eigenvalue rigidity. Recall the definition of \(\kappa \) from (10.1).

Definition A.2

(Strong stability of (2.11) on \({\mathbf {S}}\)) We say that (2.11) is strongly stable on \({\mathbf {S}}\) if Definition 5.4 holds with (5.20) replaced by

That the notion of stability from Definition A.2 is stronger than that of Definition 5.4 follows from the estimate

for \(z \in {\mathbf {S}}\), which we shall establish under the regularity assumptions of Definition 2.7. Under the same regularity assumptions, we shall also prove

for \(z \in {\mathbf {S}}\) and some constant \(c > 0\) depending only on \(\tau \). Using Lemma 4.10, we find that (A.8) implies \(|1 + m \sigma _i | \geqslant c\), which is the estimate from (3.20) (after a possible renaming of the constant \(\tau \)).

In this subsection we deal with a regular edge; the case of a regular bulk component is dealt with in the next subsection. We begin with basic estimates on the behaviour of f near a critical point.

Lemma A.3

Fix \(\tau > 0\). Suppose that the edge k is regular in the sense of Definition 2.7 (i). Then there exists a \(\tau ' > 0\), depending only on \(\tau \), such that

Proof

From Lemma 2.5 we get \(x_k = m(a_k)\). Since by assumption \(a_k \in [\tau ,C]\) for some constant C, we find from Lemma 4.10 that \(|x_k | \leqslant C\). The lower bound \(|x_k | \geqslant c\) follows from \(f'(x_k) = 0\) and (A.1), using that \(|s_i | \leqslant C\). This proves the first estimate of (A.9).

Next, from \(f'(x_k) = 0\) and (A.1) we find

Using the first estimate of (A.9) and (2.14) we find from (A.10) that \(|f''(x_k) | \leqslant C\). Moreover for \(k \in \{1, 2p\}\) (i.e. \(x_k \in I_0 \cup I_1\)) all terms in (A.10) have the same sign, and it is easy to deduce that \(|f''(x_k) | \geqslant c\).

Next, we prove the lower bound \(|f''(x_k) | \geqslant c\) for \(k = 2, \ldots , 2p - 1\). Suppose for definiteness that k is odd. (The case of even k is handled in exactly the same way.) For \(x \in [x_k, x_{k-1}]\) we have \(f'(x) \geqslant 0\) and

where in the second step we used that \((-x^3 f''(x))' < 0\) as follows from (A.1), and in the last step the estimate \(|x_k | \asymp |x_{k-1} | \asymp 1\). We therefore find

Since \(a_{k-1} - a_k \geqslant \tau \) by the assumption in Definition 2.7 (i) and \(x_{k-1} - x_k \leqslant |x_{k-1} | + |x_k | \leqslant C\), we deduce the lower bound \(f''(x_k) \geqslant c\).

Finally, the last estimate of (A.9) follows easily from the assumption (2.14) and by differentiating (A.1).

We record the following easy consequence of (A.9). Recalling that \(f(x_k) = a_k\) and \(f'(x_k) = 0\), we find, using (A.9) and the fact that f is continuous in a neighbourhood of \(x_k\), that after choosing \(\tau ' > 0\) small enough (depending on \(\tau \)) we have

Lemma A.4

Fix \(\tau > 0\). Suppose that the edge k is regular in the sense of Definition 2.7 (i). Then there exists a constant \(\tau ' > 0\), depending only on \(\tau \), such that for \(z \in {{\mathbf {D}}}_k^e\) we have (A.7) and (A.8).

Proof

We first prove (A.7). From (A.11) we find that there exists a \(\tau ' > 0\) such that for \(|E - a_k | \leqslant \tau '\) we have

From (A.9) we find that \(|f''(x_k) | \geqslant c\). For definiteness, suppose that k is odd. Then \(\mathrm{Im}\, m(E) = 0\) for \(E \geqslant a_k\) (i.e. \(E \notin \mathrm{supp}\, \varrho \)). From (A.12) we therefore deduce that for \(E \leqslant a_k\) we have \(\mathrm{Im}\, m(E) \asymp \sqrt{|E - a_k |}\). We conclude that for \(|E - a_k | \leqslant \tau '\) we have the square root behaviour \(\mathrm{Im}\, m(E) \asymp \sqrt{\kappa (E)} {\mathbf {1}} (E \in \mathrm{supp}\, \varrho )\) for the density of \(\varrho \). Now (A.7) easily follows from the definition of the Stieltjes transform.

Finally, (A.8) follows from the assumption (2.14) and (A.11). \(\square \)
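The square root behaviour of the density at a regular edge can be seen explicitly in the simplest special case. The sketch below assumes \(n = 1\), \(s_1 = 1\) and \(f(x) = -x^{-1} + \phi (x+1)^{-1}\), i.e. an identity population covariance. This special case is chosen here only for illustration and is not taken from the text. In that case \(z = f(m)\) reduces to the quadratic \(E m^2 + (E + 1 - \phi ) m + 1 = 0\), whose discriminant factorises over the two edges \((1 \pm \sqrt{\phi })^2\). The function names are hypothetical.

```python
import math


def mp_edges(phi):
    """Edges of the support for the ASSUMED special case n = 1, s_1 = 1."""
    return (1 - math.sqrt(phi)) ** 2, (1 + math.sqrt(phi)) ** 2


def im_m(E, phi):
    """Im m(E) for E > 0, from the quadratic E m^2 + (E + 1 - phi) m + 1 = 0."""
    a_minus, a_plus = mp_edges(phi)
    # Minus the discriminant: (E + 1 - phi)^2 - 4E = (E - a_minus)(E - a_plus).
    neg_disc = (a_plus - E) * (E - a_minus)
    return math.sqrt(neg_disc) / (2 * E) if neg_disc > 0 else 0.0


phi = 0.5  # hypothetical value of phi
a_minus, a_plus = mp_edges(phi)
for kappa in (1e-6, 1e-4, 1e-2):
    # The ratio stays bounded above and below as kappa tends to zero.
    print(kappa, im_m(a_plus - kappa, phi) / math.sqrt(kappa))
```

In this special case the ratio \(\mathrm{Im}\, m(E) / \sqrt{\kappa (E)}\) equals \(\sqrt{E - a_-} / (2E)\) to the left of the right edge, which is bounded above and below, and \(\mathrm{Im}\, m(E)\) vanishes to the right of it.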

Lemma A.5

Fix \(\tau > 0\). Suppose that the edge k is regular in the sense of Definition 2.7 (i). Then there exists a constant \(\tau '\), depending only on \(\tau \), such that (2.11) is strongly stable on \({\mathbf {D}}^e_k\) in the sense of Definition A.2.

Proof

We take over the notation of Definition 5.4. Abbreviate \(w(z) := f(u(z))\), so that \(|w(z) - z | \leqslant \delta (z)\).

Suppose first that \(\mathrm{Im}\, z \geqslant \tau '\) for some constant \(\tau ' > 0\). Then, from the assumption \(\delta (z) \leqslant (\log N)^{-1}\) we get \(\mathrm{Im}\, w(z) \geqslant \tau '/2\), and therefore from the uniqueness of (2.11) we find \(u(z) = m(w(z))\). Hence,

where we used the trivial bound \(|m'(\zeta ) | \leqslant (\mathrm{Im}\, \zeta )^{-2}\). This yields (A.6) at z.
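For completeness, the trivial bound follows in one line from the Stieltjes representation \(m(\zeta ) = \int (x - \zeta )^{-1} \, \varrho ({{\mathrm {d}}} x)\), assuming that \(\varrho \) has total mass at most one:

```latex
|m'(\zeta)|
  = \biggl| \int \frac{\varrho(\mathrm{d} x)}{(x - \zeta)^2} \biggr|
  \leqslant \int \frac{\varrho(\mathrm{d} x)}{|x - \zeta|^2}
  \leqslant \frac{1}{(\operatorname{Im} \zeta)^2},
  \qquad \zeta \in \mathbb{C}_+ .
```

The last step uses \(|x - \zeta | \geqslant \mathrm{Im}\, \zeta \) for real x.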

What remains therefore is the case \(|z - a_k | \leqslant 2 \tau '\), which we assume for the rest of the proof. We drop the arguments z and write the equation \(f(u) - f(m) = w - z\) as

where

Note that \(\alpha \) and \(\beta \) depend on z, and \(\alpha \) also depends on u. We suppose that

Then we claim that for small enough \(\tau '\) we have

In order to prove (A.16), we note that, by Lemma A.3, the statement of (A.11) holds under the assumption (A.15) provided \(\tau ' > 0\) is chosen small enough. Using (A.15), (A.8) (see Lemma A.3), and (A.10) we get

Using (A.9) and (A.11) we conclude that for small enough \(\tau ' > 0\) we have \(|\alpha | \asymp 1\). This concludes the proof of the first estimate of (A.16).

In order to prove the second estimate of (A.16), we note that \(\beta = m^2 f'(m)\), so that for small enough \(\tau ' > 0\) we get from (A.11) and Lemma A.3 that

Using (A.11) and Lemmas A.3 and 4.10, we conclude for small enough \(\tau ' > 0\) that

This concludes the proof of the second estimate of (A.16).

Using (4.21) and the estimate \(|w - z | \leqslant \delta \), we write (A.13) as \(\alpha (u - m)^2 + \beta (u - m) = O(\delta )\). We now proceed exactly as in the proof of [7, Lemma 4.5], by solving the quadratic equation for \(u - m\) explicitly. We select the correct solution by a continuity argument using that (A.6) holds by assumption at \(z + {{\mathrm {i}}} N^{-5}\). The second assumption of (A.15) is obtained by continuity from the estimate on \(|u - m |\) at the neighbouring value \(z + {{\mathrm {i}}} N^{-5}\). We refer to [7, Lemma 4.5] for the full details of the argument. (Note that the fact \(\alpha \) depends on u does not change this argument. This dependence may be easily dealt with using a simple fixed point argument whose details we omit.) This concludes the proof. \(\square \)
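The mechanism behind solving the quadratic can be illustrated with a small Python sketch. It treats \(\alpha \) and \(\beta \) as fixed numbers, replaces the \(O(\delta )\) term by a given value `eps`, and picks the root of smaller modulus as a stand-in for the continuity argument. These simplifications and the function name `small_root` are ours, and the sketch does not reproduce the argument of [7, Lemma 4.5].

```python
import numpy as np


def small_root(alpha, beta, eps):
    """Root of alpha * x**2 + beta * x = eps with the smaller modulus.

    Choosing the small root is a simplification standing in for the
    continuity argument; alpha must be nonzero.
    """
    roots = np.roots([alpha, beta, -eps])
    return roots[np.argmin(np.abs(roots))]


# Away from the edge (|beta| of order one) the linear term dominates.
print(small_root(1.0, 0.5, 1e-4))
# At the edge (beta = 0) only the quadratic term remains.
print(abs(small_root(1.0, 0.0, 1e-4)))
```

In the first regime the selected root is close to `eps / beta`, and in the second its modulus is `sqrt(eps / alpha)`. These are the two scales that compete in the stability estimate near an edge, where \(\beta \) becomes small.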

A.3. Stability in a regular bulk component

In this subsection we establish the basic estimates (A.7) and (A.8) as well as the stability of (2.11) in a regular bulk component k, i.e. for \(z \in {{\mathbf {D}}}^b_k\).

Lemma A.6

Fix \(\tau , \tau ' > 0\) and suppose that the bulk component \(k = 1, \ldots , p\) is regular in the sense of Definition 2.7 (ii). Then for \(z \in {\mathbf {D}}^b_k\) we have (A.7) and (A.8). Moreover, (2.11) is strongly stable on \({{\mathbf {D}}}^b_k\) in the sense of Definition A.2.

Proof

The estimates (A.7) and (A.8) follow trivially from the assumption in Definition 2.7 (ii), using \(\kappa \leqslant C\) as follows from Lemma 2.5.

In order to show the strong stability of (2.11), we proceed as in the proof of Lemma A.5. Mimicking the argument there, we find that it suffices to prove that \(|\alpha | \leqslant C\) and \(|\beta | \asymp 1\) for \(z \in {\mathbf {D}}^b_k\), where \(\alpha \) and \(\beta \) were defined in (A.14) and u satisfies \(|u - m | \leqslant (\log N)^{-1/3}\).

Note that Definition 2.7 (ii) immediately implies that \(\mathrm{Im}\, m \geqslant c\) for some constant \(c > 0\). The upper bound \(|\alpha | + |\beta | \leqslant C\) easily follows from the definition (A.14) combined with Lemma 4.10. What remains is the proof of the lower bound \(|\beta | \geqslant c\). To that end, we take the imaginary part of (2.11) to get

Using that \(\mathrm{Im}\, m \geqslant c\), a simple analysis of the arguments of the expressions \((m + s_i^{-1})^2\) on the left-hand side of (A.17) yields

where in the last step we used (A.17). Recalling the definition of \(\beta \) from (A.14), we conclude that \(|\beta | \geqslant c\). This concludes the proof. \(\square \)

Remark A.7

The bulk regularity condition from Definition 2.7 (ii) is stable under perturbation of \(\pi \). To see this, define the shifted empirical density  , and the associated Stieltjes transform \(m_t(E)\) and function \(f_t(x)\). Differentiating \(f_t(m_t(E)) = E\) in t yields \((\partial _t m)_t(E) = - (\partial _t f)_t(m_t(E)) / (\partial _x f)_t(m_t(E))\). Using that

by (A.8), and \((\partial _x f)_t(m_t(E)) = m(E)^{-2} \beta (E)\), we find from \(|\beta (E) | \asymp |m(E) | \asymp 1\) that \((\partial _t m)_0(E) = O(1)\) for \(E \in [a_{2k} + \tau ', a_{2k - 1} - \tau ']\). A simple extension of this argument shows that if Definition 2.7 (ii) holds for \(\pi \) then it holds for all \(\pi _t\) with t in some ball of fixed radius around zero.

A.4. Stability outside of the spectrum

In this subsection we establish the basic estimates (A.7) and (A.8) as well as the stability of (2.11) outside of the spectrum, i.e. for \(z \in {\mathbf {D}}^o\).

Lemma A.8

Fix \(\tau , \tau ' > 0\). Then for \(z \in {\mathbf {D}}^o\) we have (A.7) and (A.8). Moreover, (2.11) is strongly stable on \({\mathbf {D}}^o\) in the sense of Definition A.2.

Proof

Since m is the Stieltjes transform of a measure \(\varrho \) with bounded density (see Lemma 4.10), we find that

on \({\mathbf {D}}^o\). This proves (A.7).

We first establish (A.8) for \(z = E \in \mathbb {R}\) satisfying \(\mathrm{dist}(E, \mathrm{supp}\, \varrho ) \geqslant \tau '\). For definiteness, let \(z = E \in [a_{2l}, a_{2l+1}]\) for some l, and let \(i = 2, \ldots , n\) satisfy \(\{a_{2l}, a_{2l+1}\} = f(\partial J_i)\), where we recall the set \(J_i\) from the proof of Lemma 2.5. Then there exists an \(x \in J_i\) such that \(E = f(x)\). Moreover, from (A.1) we get \(f'(y) \leqslant y^{-2}\) for \(y \in \mathbb {R}\), so that for \(y \in J_i\) we have \(0 \leqslant f'(y) \leqslant C\) by (A.9). Since \(\mathrm{dist}(E, \{a_{2l}, a_{2l+1}\}) \geqslant \tau '\), we conclude that \(\mathrm{dist}(x, \partial J_i) \geqslant c\), which implies \(\mathrm{dist}(x, \partial I_i) \geqslant c\). The cases \(E > a_1\) and \(E < a_{2p}\) are handled similarly. This concludes the proof of (A.8) for \(z = E \in \mathbb {R}\) satisfying \(\mathrm{dist}(E, \mathrm{supp}\, \varrho ) \geqslant \tau '\).

Next, we note that if \(\eta \geqslant \varepsilon \) for some fixed \(\varepsilon > 0\) then (A.8) is trivially true by (A.18). On the other hand, if \(\mathrm{Im}\, m \leqslant \varepsilon \) then we get

for \(\varepsilon \) small enough, where we used the case \(z = E \in \mathbb {R}\) satisfying \(\mathrm{dist}(E, \mathrm{supp}\, \varrho ) \geqslant \tau '\) established above and the estimate \(|m'(z) | = \bigl |\int \frac{1}{(x - z)^2} \, \varrho ({{\mathrm {d}}} x) \bigr | \leqslant C\) (since \(|x - z | \geqslant \tau '\) for \(z \in {\mathbf {D}}^o\) and \(x \in \mathrm{supp}\, \varrho \)). This concludes the proof of (A.8) for \(z \in {\mathbf {D}}^o\).

As in the proof of Lemma A.6, to prove the strong stability of (2.11) on \({\mathbf {D}}^o\), it suffices to show that \(|\alpha | \leqslant C\) and \(|\beta | \asymp 1\) for \(z \in {\mathbf {D}}^o\), where \(\alpha \) and \(\beta \) were defined in (A.14) and u satisfies \(|u - m | \leqslant (\log N)^{-1/3}\). The upper bound \(|\alpha | + |\beta | \leqslant C\) follows immediately from (A.8), and the lower bound \(|\beta | \geqslant c\) follows from the definition (A.14) combined with (A.17), (A.18), and Lemma 4.10. This concludes the proof. \(\square \)

Cite this article

Knowles, A., Yin, J. Anisotropic local laws for random matrices. Probab. Theory Relat. Fields 169, 257–352 (2017). https://doi.org/10.1007/s00440-016-0730-4

DOI: https://doi.org/10.1007/s00440-016-0730-4